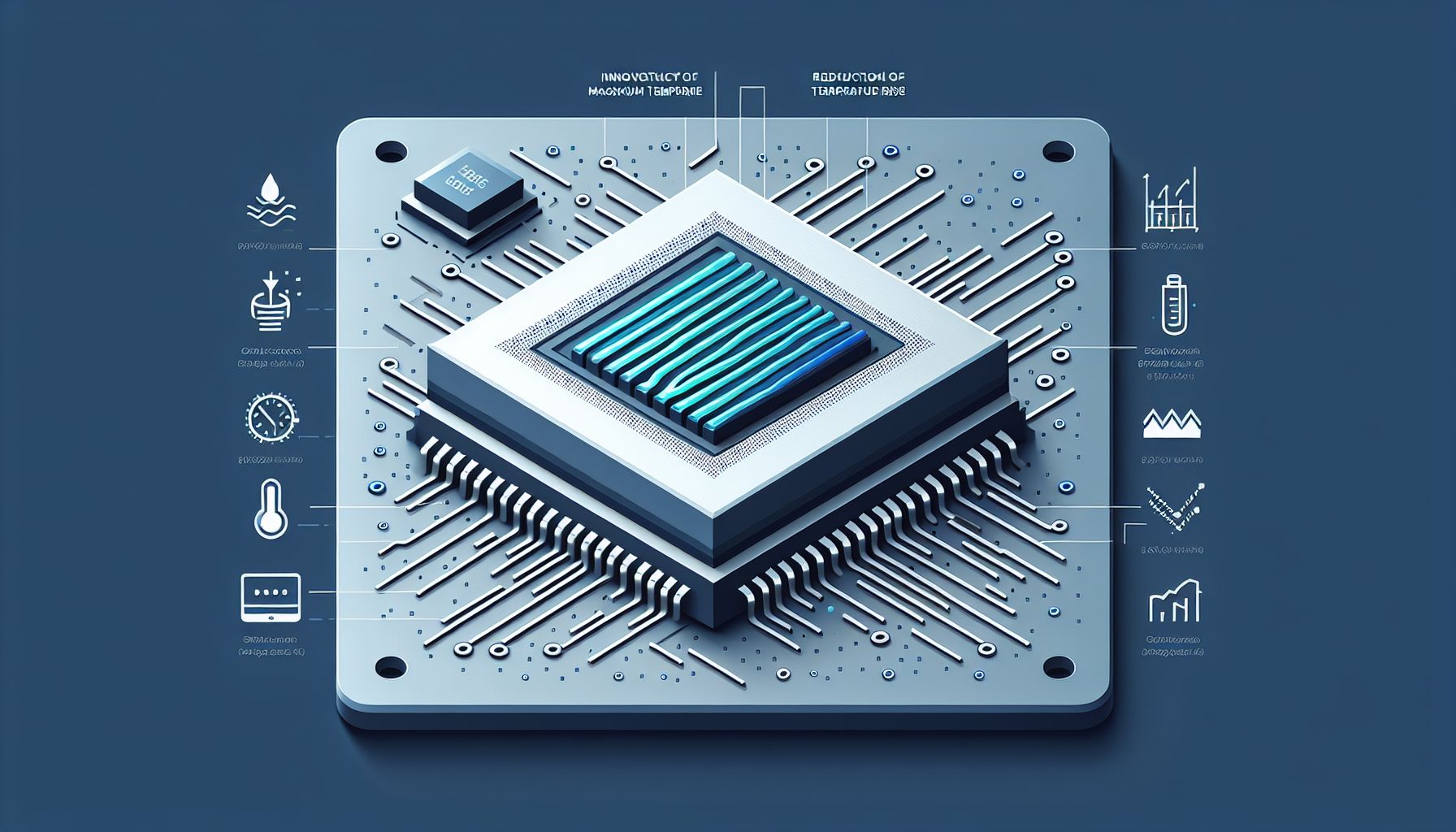

Microsoft's microfluidic cooling cuts GPU temperature rise by 65%

Redmond, Tuesday, 23 September 2025.

As AI chips generate more heat, Microsoft has developed a microfluidic cooling system that etches tiny channels directly into the silicon so that liquid coolant can flow across the chip itself. In lab tests, the system reduced the maximum temperature rise inside a GPU by 65% and removed heat up to three times better than traditional cold plates. According to Microsoft, the approach could allow denser, more powerful chip designs, including 3D-stacked chips, and could raise server density in data centers.
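The two headline figures can be checked against each other with a back-of-the-envelope calculation. The sketch below assumes a simple steady-state model in which temperature rise equals chip power times thermal resistance, and that chip power is unchanged between the two cooling setups. Both are assumptions for illustration, not details of Microsoft's test method, and the function name is hypothetical.

```python
def thermal_resistance_ratio(temp_rise_reduction: float) -> float:
    """Ratio of old to new thermal resistance at constant power.

    Assumes the lumped model delta_T = P * R_th, so a fractional cut in
    temperature rise is the same fractional cut in thermal resistance.
    """
    if not 0.0 <= temp_rise_reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - temp_rise_reduction)


# A 65% lower temperature rise leaves 35% of the original rise.
ratio = thermal_resistance_ratio(0.65)
print(f"Implied heat-removal improvement: {ratio:.2f}x")  # 2.86x
```

Under these assumptions, a 65% cut in temperature rise corresponds to roughly 2.9 times lower thermal resistance, which is in line with the "three times better" claim.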

Microsoft’s cooling innovation and market impact

Microsoft’s microfluidic cooling system could significantly reduce energy consumption in data centers [1]. Ricardo Bianchini, a Microsoft technical fellow, notes that reduced power consumption for cooling will lessen the strain on energy grids [1]. Jim Kleewein, a technical fellow at Microsoft, highlights the system’s potential to improve cost, reliability, speed, and sustainability [1]. This technology may allow for overclocking chips without the risk of overheating, offering a competitive edge in performance and efficiency [1]. Judy Priest, a corporate vice president at Microsoft, sees opportunities for new chip architectures due to increased efficiency [1].

Liquid cooling adoption and industry standards

The rising demand for AI is pushing data centers toward liquid cooling [6]. Industry experts are gathering at conferences such as the 2025 Advanced Packaging and High-Computing-Power Thermal Management Conference to discuss these thermal challenges [6]. Dr. Evelyn Reed of Thermal Solutions Inc. emphasizes that liquid cooling is becoming essential for data centers [6]. The industry also recognizes the need for standardized AI data center (AIDC) computing infrastructure to address long construction times and incomplete infrastructure standards [5]. Such standards are crucial for the healthy development of the industry [5].

Nvidia’s strategic investments in AI infrastructure

Nvidia is making substantial investments to bolster AI infrastructure, including a planned $100 billion investment in OpenAI [2][4]. The investment is intended to fund large-scale data center construction and highlights the close ties between the two companies [4]. Nvidia’s CEO, Jensen Huang, said that the 10 gigawatts (GW) of power planned for OpenAI’s systems will require 4 to 5 million GPUs, roughly equal to Nvidia’s total GPU shipments this year and double last year’s volume [2][4]. Bryn Talkington, a partner at Requisite Capital Management, describes the investment as a virtuous cycle for Nvidia [4].
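Huang's figures imply a power budget per GPU, which a short calculation makes concrete. The sketch below simply divides the quoted 10 GW by the quoted GPU counts; the function name is hypothetical, and the result is an all-in figure that would include cooling, networking, and other facility overhead, not the power draw of the chip alone.

```python
def power_per_gpu_kw(total_gw: float, gpu_count: int) -> float:
    """Average power per GPU in kilowatts (1 GW = 1,000,000 kW)."""
    return total_gw * 1_000_000 / gpu_count


# Quoted range: 4 to 5 million GPUs for 10 GW.
low = power_per_gpu_kw(10, 5_000_000)
high = power_per_gpu_kw(10, 4_000_000)
print(f"Implied budget: {low:.1f} to {high:.1f} kW per GPU")  # 2.0 to 2.5 kW
```

At roughly 2 to 2.5 kW per GPU, the scale of the heat to be removed helps explain the interest in liquid cooling described above.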

Wall Street’s reaction to Nvidia’s OpenAI investment

Wall Street analysts have reacted positively to Nvidia’s $100 billion investment in OpenAI, viewing it as a strategic move that will solidify Nvidia’s market position [7][8]. Bank of America analysts project that this investment could yield returns of $300 billion to $500 billion over time [7][8]. This collaboration is expected to make Nvidia the preferred strategic partner for computing and networking for OpenAI [7][8]. Following the investment announcement, Nvidia’s stock price increased by nearly 4%, adding approximately $170 billion to the company’s market capitalization [4].
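As a rough consistency check on the stock figures, the quoted gain and percentage move imply a starting market capitalization. The sketch below treats "nearly 4%" as exactly 4% and "approximately $170 billion" as exactly $170 billion, so the result is only an approximation; the function name is hypothetical.

```python
def implied_prior_market_cap(gain_billion: float, pct_rise: float) -> float:
    """Market cap before the move, in billions, given gain and percent rise."""
    if pct_rise <= 0:
        raise ValueError("pct_rise must be positive")
    return gain_billion / (pct_rise / 100.0)


prior = implied_prior_market_cap(170, 4)
print(f"Implied prior market cap: about ${prior / 1000:.2f} trillion")  # 4.25
```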

Sources

- [1] news.microsoft.com

- [2] finance.sina.com.cn

- [3] tech.ifeng.com

- [4] finance.sina.com.cn

- [5] www.stdaily.com

- [6] www.eet-china.com

- [7] www.cls.cn

- [8] wap.eastmoney.com